Rethinking RAG in the Era of Long-Context LLMs, A Lightweight Framework for Combining Domain Experts, and More!

Vol. 68 for Sep 02 - Sep 08, 2024

Stay Ahead of the Curve with the Latest Advancements and Discoveries in Information Retrieval.

This week’s newsletter highlights the following research:

Retrieval-Augmented Generation for Superior Long-Context Question Answering, from NVIDIA

A Near Real-Time Ranking Platform for Modern E-Commerce, from Zalando

A Method for Automated Task-Specific Dataset Creation and Model Fine-Tuning, from Ziegler et al.

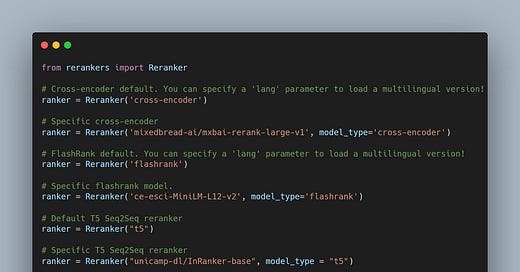

A Lightweight Framework for Combining Domain Experts in Information Retrieval, from Lee et al.

Dynamically Determining Compression Rates for Improved RAG Performance, from Zhang et al.

A Comprehensive Study of Pooling and Attention Strategies for Optimizing LLM-Based Embedding Models, from HKUST

Unbiasing Short-Video Recommenders through Causal Inference, from Dong et al.

Non-Parametric Embedding Fine-Tuning for Improved k-NN Retrieval, from Zeighami et al.

A High-Performance Batch Query Architecture for Industrial-Scale Recommendation Systems, from Zhang et al.

Correcting Stale Embeddings for Computationally Efficient Dense Retrieval, from Google DeepMind

