A Foundation Model for Generative Recommendation, A Unified Definition of Hallucination, and More!

Vol.137 for Dec 29, 2025 - Jan 04, 2026

Stay Ahead of the Curve with the Latest Advancements and Discoveries in Information Retrieval.

This week’s newsletter highlights the following research:

A Foundation Model for Generative Recommendation, from Kuaishou

A Unified Definition of Hallucination, from Liu et al.

Fine-Tuning Small Language Models for E-Commerce Agent Optimization: A PayPal-NVIDIA Collaboration, from Sahami et al.

Evaluating the Factuality Gap in Text-Based Explainable Recommender Systems, from Kabongo et al.

Enhancing Item-to-Item Recommendations with LLM-Based Data Generation and Filtering, from Alibaba

A Selective Regularization Framework for Context-Aware LLM Knowledge Integration in Recommendation Systems, from Yang et al.

Overcoming Inference Bottlenecks in Generative Slate Recommendation via Hierarchical Planning, from Tencent

Efficient Billion-Scale ANNS via Hierarchical I/O Governance Under Semantic Skewness, from Huan et al.

Instruction-Following Generative Recommendation with Fast-Slow Thinking, from JD.com

The Challenge of Teaching LLMs to Admit Ignorance, from Google Research

[1] OpenOneRec Technical Report

The OpenOneRec technical report from Kuaishou introduces a foundation model framework that bridges recommendation systems with LLMs. The report contains three main contributions:

RecIF-Bench, a comprehensive benchmark evaluating 8 diverse tasks across semantic alignment, fundamental recommendation, instruction following, and reasoning capabilities, accompanied by a dataset of 96 million interactions from 160,000 users spanning short-video, e-commerce, and advertising domains.

A fully open-sourced training pipeline implementing itemic tokenization (representing items as hierarchical discrete tokens), two-stage pre-training combining recommendation data with general knowledge, and a three-phase post-training strategy involving supervised fine-tuning, on-policy distillation to preserve general capabilities, and reinforcement learning optimized for ranking metrics.

OneRec-Foundation models (1.7B and 8B parameters) that achieve state-of-the-art results across all RecIF-Bench tasks while maintaining general reasoning abilities, with the 8B model demonstrating exceptional cross-domain transferability.

The work empirically validates scaling laws specific to recommendation, revealing a more data-intensive scaling regime than general language models, and shows that multi-domain joint training significantly outperforms domain-specific approaches for foundation models.

📚 https://arxiv.org/abs/2512.24762

👨🏽‍💻 https://github.com/Kuaishou-OneRec/OpenOneRec

🤗 https://huggingface.co/OpenOneRec

[2] A Unified Definition of Hallucination, Or: It’s the World Model, Stupid

This paper from Liu et al. proposes a unified definition of hallucination in language models as inaccurate internal world modeling that manifests observably to users. The authors argue that existing definitions across different domains represent different instantiations of the same underlying framework. The authors formalize this through a reference world model W = (S, H, R) comprising world states, interaction histories, and constraining rules, along with a view function V determining what information is accessible, a conflict resolution policy P, and a truth function T_{W,P} that labels atomic claims as true, false, or unknown. By explicitly specifying these components, the framework distinguishes genuine hallucinations (world-modeling errors where claims contradict the reference world) from planning errors or incentive misalignments. It provides a common evaluation language across disparate benchmarks and enables the creation of large-scale synthetic benchmarks where hallucination labels can be computed automatically from fully-specified environments like chess, NetHack, or simulated codebases. The paper also demonstrates how different prior definitions emerge from varying choices of W (source documents vs. knowledge bases vs. environment states), V (full context vs. retrieved snippets vs. partial observations), and P (document-override vs. parametric-priority policies).
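To make the roles of W, V, P, and T more concrete, here is a minimal Python sketch of a truth function over a toy world. It is an illustration under stated assumptions rather than the authors' formalization: the `World` fields, the key-value encoding of atomic claims, and the `prefer_history` flag standing in for the conflict resolution policy are all hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List, Optional, Set


class Label(Enum):
    TRUE = "true"
    FALSE = "false"
    UNKNOWN = "unknown"


@dataclass
class World:
    """Toy reference world W = (S, H, R); every field is an illustrative assumption."""
    states: Dict[str, str]                   # S: facts held by the environment
    history: Dict[str, str]                  # H: facts established during the interaction
    rules: List[Callable[[str, str], bool]]  # R: constraints every claim must satisfy


def view(world: World, visible_keys: Set[str]) -> World:
    """V: keep only the part of the world that is accessible."""
    return World(
        states={k: v for k, v in world.states.items() if k in visible_keys},
        history={k: v for k, v in world.history.items() if k in visible_keys},
        rules=world.rules,
    )


def resolve(world: World, key: str, prefer_history: bool = True) -> Optional[str]:
    """P: choose one reference value when S and H disagree (hypothetical policy)."""
    if prefer_history:
        first, second = world.history, world.states
    else:
        first, second = world.states, world.history
    if key in first:
        return first[key]
    return second.get(key)


def truth(world: World, key: str, claimed: str, prefer_history: bool = True) -> Label:
    """T_{W,P}: label the atomic claim 'key = claimed' as true, false, or unknown."""
    if not all(rule(key, claimed) for rule in world.rules):
        return Label.FALSE
    reference = resolve(world, key, prefer_history)
    if reference is None:
        return Label.UNKNOWN
    return Label.TRUE if reference == claimed else Label.FALSE


world = World(
    states={"capital_of_france": "Paris", "white_king": "e1"},
    history={"white_king": "g1"},  # the king moved during the game
    rules=[],
)
visible = view(world, {"white_king"})
king_now = truth(visible, "white_king", "g1")           # history wins under this policy
king_stale = truth(visible, "white_king", "e1")         # contradicts the reference world
capital = truth(visible, "capital_of_france", "Paris")  # outside the view
```

In this sketch only claims labeled `FALSE` would count as hallucinations. A claim outside the view is `UNKNOWN`, which keeps missing information separate from world-modeling errors.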

📚 https://arxiv.org/abs/2512.21577

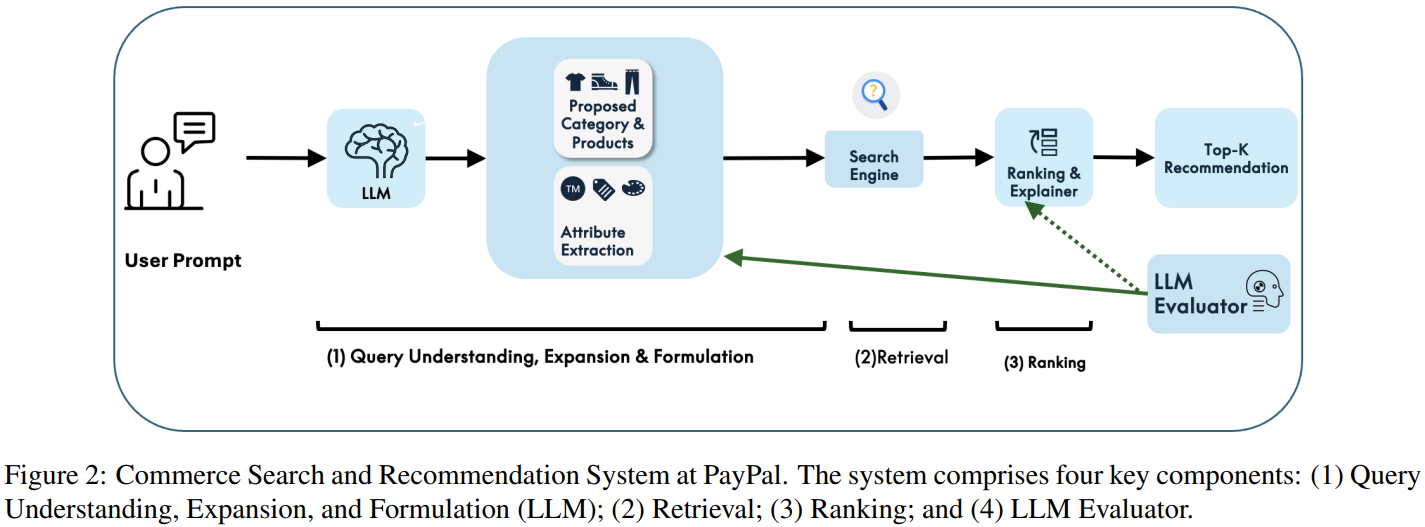

[3] NEMO-4-PAYPAL: Leveraging NVIDIA’s Nemo Framework for empowering PayPal’s Commerce Agent

This paper from Sahami et al. presents NEMO-4-PAYPAL, PayPal’s Commerce Agent system developed in partnership with NVIDIA to optimize e-commerce interactions through fine-tuned small language models. The researchers addressed a performance bottleneck where the search and retrieval component consumed over 50% of total system response time by replacing their base model with a fine-tuned Nemotron-8B SLM using NVIDIA’s NeMo Framework. Through systematic experimentation with 20 LoRA-based model variants exploring different hyperparameters, they achieved substantial improvements: 49% reduction in overall agent latency, 58% improvement in retrieval latency, and 45% reduction in GPU costs while maintaining competitive quality metrics. The architecture employs a multi-agent system that handles query understanding, retrieval, ranking, and personalized recommendations through LLM-powered approaches, specifically utilizing Hypothetical Document Embeddings (HyDE) for product retrieval. The study also explored Direct Preference Optimization (DPO) for further refinement and compared different inference optimization strategies across NVIDIA H100 and B200 GPUs with varying tensor parallelism configurations. This work establishes a scalable framework for production e-commerce AI systems and represents the first application of NVIDIA’s NeMo Framework to commerce-specific agent optimization.
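The HyDE idea mentioned above can be sketched in a few lines: instead of embedding the raw user query, the system embeds a generated hypothetical product description and retrieves the nearest real products. The sketch below is a toy illustration, not PayPal's pipeline. The bag-of-words `embed` function, the `fake_generator` stand-in for the fine-tuned SLM, and the three-item catalog are all assumptions.

```python
import math
from collections import Counter
from typing import Callable, Dict, List


def embed(text: str) -> Counter:
    """Toy bag-of-words embedding; a real system would use a dense encoder."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def hyde_search(query: str, catalog: Dict[str, str],
                generate: Callable[[str], str], k: int = 2) -> List[str]:
    """Rank products by similarity to a generated hypothetical document."""
    hypothetical = generate(query)
    q_vec = embed(hypothetical)
    ranked = sorted(catalog,
                    key=lambda pid: cosine(q_vec, embed(catalog[pid])),
                    reverse=True)
    return ranked[:k]


def fake_generator(query: str) -> str:
    """Stand-in for the language model; returns a fixed, hypothetical description."""
    return "lightweight waterproof trail running shoes with cushioned sole"


catalog = {
    "p1": "waterproof trail running shoes cushioned sole",
    "p2": "leather office shoes formal",
    "p3": "stainless steel water bottle",
}
query = "something for jogging in the rain"
top = hyde_search(query, catalog, fake_generator, k=1)
```

The raw query shares no words with any catalog entry, so a direct lexical match would score every product at zero, while the hypothetical description lands close to `p1`. The generation step is also where the latency cost sits, which is consistent with the paper's focus on speeding up the retrieval component.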

📚 https://arxiv.org/abs/2512.21578

[4] On the Factual Consistency of Text-based Explainable Recommendation Models

This paper from Kabongo et al. investigates the factual consistency of text-based explainable recommendation systems, revealing a disconnect between surface-level text quality and factual accuracy. The authors develop a comprehensive evaluation framework that uses LLMs to extract atomic explanatory statements from user reviews, creating statement-topic-sentiment triplets that serve as ground truth for five Amazon Reviews categories (Toys, Clothes, Beauty, Sports, and Cellphones). They propose statement-level metrics combining LLM-based and NLI-based approaches to assess both precision (whether generated explanations are supported by evidence) and recall (whether they cover ground-truth content). Extensive experimentation uncovers a large gap: while models achieve high semantic similarity scores (BERTScore F1: 0.81-0.90), LLM-based statement-level precision ranges from only 4.38% to 32.88% and recall from 0.27% to 29.86%. Current models therefore generate fluent, contextually appropriate text that superficially appears explanatory but frequently hallucinates content unsupported by actual user preferences. The results highlight the limitations of evaluation practices that prioritize semantic similarity over factual grounding and underscore the need for factuality-aware architectures and training objectives in explainable recommendation systems.
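As a rough illustration of statement-level precision and recall, the sketch below scores a generated explanation against ground-truth statements using a pluggable `supports` judge. It is a simplified reading of the metric idea, not the paper's exact definition: the `exact_match` judge is a placeholder for the LLM-based and NLI-based judges, and the example statements are invented.

```python
from typing import Callable, List, Tuple


def statement_precision_recall(
    generated: List[str],
    reference: List[str],
    supports: Callable[[str, str], bool],
) -> Tuple[float, float]:
    """Precision: share of generated statements supported by some reference statement.
    Recall: share of reference statements covered by some generated statement."""
    supported = sum(any(supports(ref, gen) for ref in reference) for gen in generated)
    covered = sum(any(supports(gen, ref) for gen in generated) for ref in reference)
    precision = supported / len(generated) if generated else 0.0
    recall = covered / len(reference) if reference else 0.0
    return precision, recall


def exact_match(premise: str, hypothesis: str) -> bool:
    """Placeholder judge (assumption); swap in an NLI model or an LLM call."""
    return premise.strip().lower() == hypothesis.strip().lower()


generated = ["the battery lasts long", "the screen is bright", "the case feels cheap"]
reference = ["the battery lasts long", "shipping was slow"]
precision, recall = statement_precision_recall(generated, reference, exact_match)
```

Here one of three generated statements is supported and one of two reference statements is covered, so precision is 1/3 and recall is 1/2. An embedding-based similarity score could still be high for the same pair of texts, which is the kind of gap the paper reports.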

📚 https://arxiv.org/abs/2512.24366

👨🏽‍💻 https://github.com/BenKabongo25/factual_explainable_recommendation

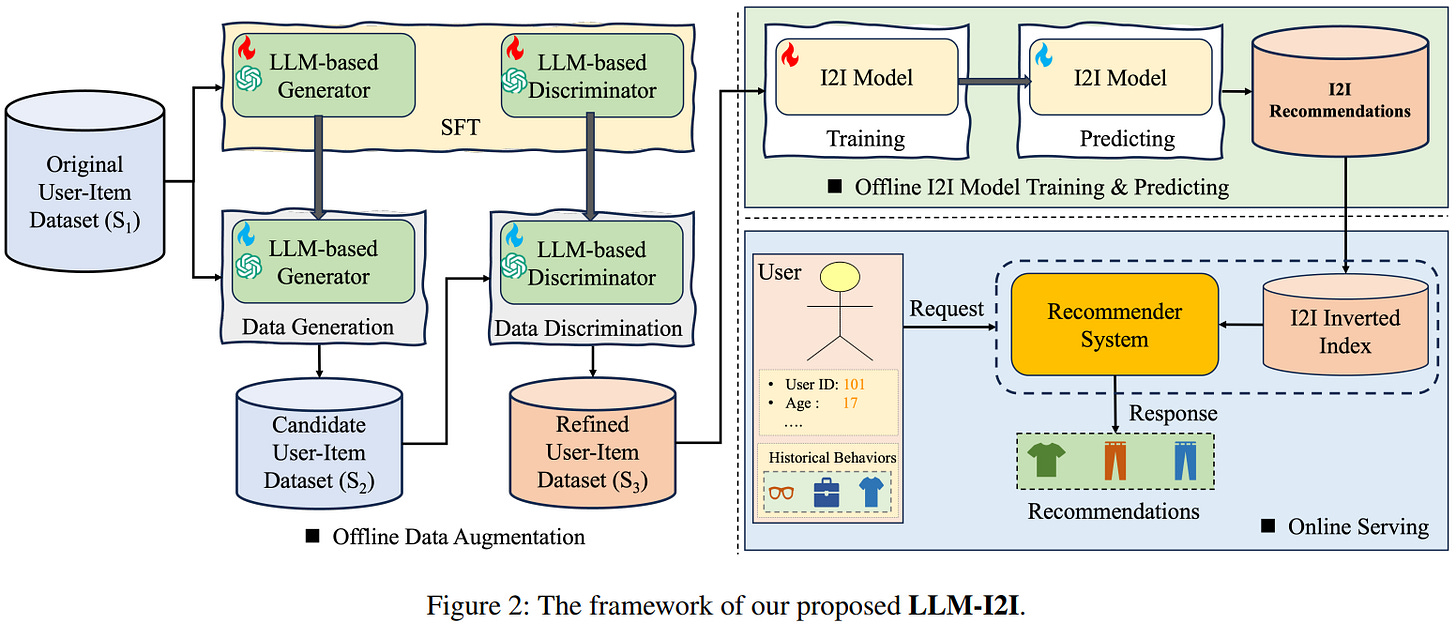

[5] LLM-I2I: Boost Your Small Item2Item Recommendation Model with Large Language Model

This paper from Alibaba introduces LLM-I2I, a data-centric approach that leverages LLMs to enhance Item-to-Item (I2I) recommendation systems and addresses data sparsity and noise issues. The method employs two key components: an LLM-based generator that synthesizes user-item interactions (particularly for long-tail items with limited historical data) using a specialized long-tail aware loss function, and an LLM-based discriminator that filters low-quality synthetic and real interactions to ensure data quality. Unlike model-centric approaches that require architectural changes and increased computational resources, LLM-I2I refines training data without modifying existing I2I models, making it cost-effective for production deployment. Extensive experiments on both academic datasets (Amazon Review Dataset) and an industrial billion-scale dataset from AliExpress demonstrate significant performance improvements across various I2I algorithms (BM25, BPR, YoutubeDNN, and Swing), with particularly strong gains for long-tail items.

📚 https://arxiv.org/abs/2512.21595

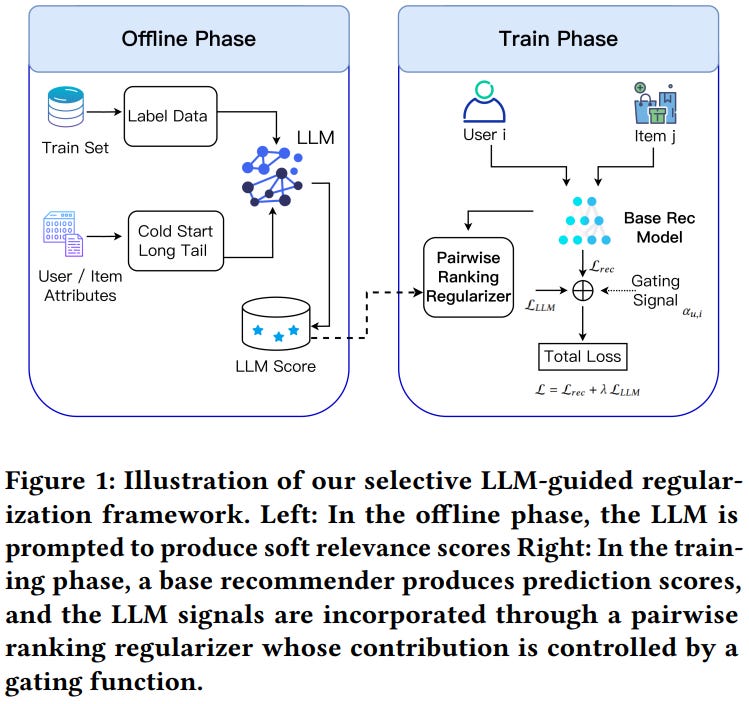

[6] Selective LLM-Guided Regularization for Enhancing Recommendation Models

This paper from Yang et al. introduces Selective LLM-Guided Regularization (S-LLMR), a model-agnostic framework that addresses the limitations of existing LLM-enhanced recommendation systems by selectively incorporating LLM signals only when they are predicted to be reliable. Current approaches that apply global knowledge distillation or use LLMs as standalone recommenders suffer from computational costs, position bias, and unreliable predictions across the user-item space. S-LLMR employs a trainable gating mechanism informed by user history length, item popularity, and model uncertainty to activate LLM-based pairwise ranking supervision only in contexts where LLMs demonstrate empirical advantages. The framework performs all LLM scoring offline using GPT-4o-mini to generate soft relevance scores, eliminating inference-time overhead, and particularly targets cold-start users and long-tail items through augmented candidate sets. Experiments across six diverse recommendation backbones (DeepFM, xDeepFM, AutoInt, DCNv1, DCNv2, and DIN) on three Amazon Review datasets demonstrate that S-LLMR consistently outperforms global distillation baselines like LLM-CF.
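The selective part of S-LLMR can be pictured as a gate that scales an LLM-derived pairwise ranking term in the training loss. The sketch below is a minimal illustration assuming a logistic gate with fixed, hand-picked weights. In the paper the gate is trainable, and the function names, weights, and `lam` coefficient here are hypothetical.

```python
import math
from typing import Sequence


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gate(history_len: int, item_popularity: float, uncertainty: float,
         weights: Sequence[float] = (-0.3, -2.0, 3.0), bias: float = 0.5) -> float:
    """Hypothetical gate: opens for short histories, unpopular items, uncertain models."""
    z = (bias
         + weights[0] * history_len
         + weights[1] * item_popularity
         + weights[2] * uncertainty)
    return sigmoid(z)


def pairwise_loss(score_pos: float, score_neg: float) -> float:
    """Logistic pairwise ranking loss: small when the preferred item scores higher."""
    return math.log(1.0 + math.exp(-(score_pos - score_neg)))


def selective_loss(base_loss: float, g: float, score_a: float, score_b: float,
                   llm_a: float, llm_b: float, lam: float = 0.5) -> float:
    """Base loss plus a gated term that nudges the model toward the LLM's pair ordering."""
    if llm_a >= llm_b:
        reg = pairwise_loss(score_a, score_b)
    else:
        reg = pairwise_loss(score_b, score_a)
    return base_loss + lam * g * reg


cold = gate(history_len=1, item_popularity=0.05, uncertainty=0.9)   # cold-start case
warm = gate(history_len=40, item_popularity=0.9, uncertainty=0.1)   # well-covered case
loss_cold = selective_loss(1.0, cold, score_a=0.2, score_b=0.6, llm_a=0.9, llm_b=0.1)
loss_warm = selective_loss(1.0, warm, score_a=0.2, score_b=0.6, llm_a=0.9, llm_b=0.1)
```

With these illustrative weights the gate is nearly open for the cold-start example and nearly closed for the warm one, so the LLM ordering only affects the loss where the base model has little evidence. Because the LLM scores are computed offline, nothing in this loss requires an LLM call at serving time.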

📚 https://arxiv.org/abs/2512.21526

[7] HiGR: Efficient Generative Slate Recommendation via Hierarchical Planning and Multi-Objective Preference Alignment

This paper from Tencent introduces HiGR (Hierarchical Generative Slate Recommendation), a generative framework for slate recommendation, the setting in which users receive a ranked list of items simultaneously. The authors identify three critical challenges in existing generative systems for this setting: semantically entangled item tokenization, inefficient sequential decoding requiring many inference steps, and a lack of holistic slate planning. HiGR addresses these through three main innovations:

A contrastive residual quantization autoencoder (CRQ-VAE) that creates semantically structured item IDs with clear hierarchical relationships.

A hierarchical slate decoder (HSD) that separates generation into a coarse-grained list-level planning stage followed by fine-grained item-level decoding, enabling parallel generation and reducing inference steps.

Odds ratio preference optimization (ORPO) for listwise preference alignment that directly optimizes slate quality using implicit user feedback across three objectives: ranking fidelity, genuine interest, and diversity.

Evaluated on Tencent’s commercial media platform, HiGR demonstrates improvement in recommendation quality with 5x faster inference compared to state-of-the-art methods, confirming its practical effectiveness for industrial-scale deployment.
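To show why residual quantization yields hierarchical item IDs, here is a minimal sketch of plain residual quantization with hand-written codebooks. It does not implement the contrastive training of CRQ-VAE; the codebooks, vectors, and function names are assumptions chosen only to show how a coarse code and a fine code are derived.

```python
from typing import List, Sequence

Vector = Sequence[float]


def nearest(codebook: List[Vector], x: Vector) -> int:
    """Index of the codeword closest to x in squared Euclidean distance."""
    return min(range(len(codebook)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(codebook[i], x)))


def residual_quantize(x: Vector, codebooks: List[List[Vector]]) -> List[int]:
    """Encode x as one code per level; each level quantizes the previous residual."""
    residual = list(x)
    codes = []
    for codebook in codebooks:
        idx = nearest(codebook, residual)
        codes.append(idx)
        # Subtract the chosen codeword so the next level encodes what is left over.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return codes


codebooks = [
    [(0.0, 0.0), (10.0, 10.0)],  # level 1: coarse clusters (illustrative values)
    [(-1.0, 0.0), (1.0, 0.0)],   # level 2: finer offsets within a cluster
]
item_a = residual_quantize((10.8, 10.1), codebooks)
item_b = residual_quantize((9.1, 9.9), codebooks)
item_c = residual_quantize((0.7, 0.2), codebooks)
```

Items `item_a` and `item_b` sit near the same coarse centroid, so they share the first code and differ only in the second, while `item_c` gets a different first code. That shared-prefix property is what lets a decoder plan at the coarse level before filling in item-level detail.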

📚 https://arxiv.org/abs/2512.24787

[8] OrchANN: A Unified I/O Orchestration Framework for Skewed Out-of-Core Vector Search

OrchANN from Huan et al. addresses inefficiencies in billion-scale out-of-core approximate nearest neighbor search (ANNS) systems that emerge under skewed semantic embeddings, particularly in RAG workloads. Existing systems suffer from three fundamental issues: uniform local indexing that mismatches cluster scales, coarse static routing that misguides queries and inflates probed partitions, and lossy pruning at both cluster and vector levels that triggers wasteful “fetch-to-discard” I/O patterns. OrchANN introduces a unified I/O orchestration model governing the entire route-access-verify pipeline through three innovations:

Auto-Profiler Guided Hybrid Indexing, which uses hardware cost models to assign heterogeneous local index types (Flat, Graph, or IVF) per cluster based on scale without costly data rebalancing.

Query-Aware Dynamic Graph Abstraction, which maintains a compact in-memory navigation graph refreshed via lock-free snapshot updates with hot regions derived from query trajectories.

Multi-Level Pruning for Hybrid Execution, which performs cluster reordering with early stopping and applies triangle-inequality bounds to filter candidates before issuing SSD reads.

Evaluated on five datasets and traditional benchmarks under strict 4GB memory constraints, OrchANN achieves up to 17.2x higher QPS and 25.0x lower latency than baselines (DiskANN, Starling, SPANN, PipeANN) while reducing SSD accesses by 3.5-7x and maintaining storage efficiency without replication.
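The triangle-inequality filter is easy to illustrate: if each stored vector's distance to its cluster centroid is kept in memory, then |d(q, c) - d(x, c)| is a lower bound on d(q, x), and any vector whose bound already exceeds the search radius can be skipped without a disk read. The sketch below assumes Euclidean distance and a fixed radius. The `prune_then_read` function and the in-memory `disk` dictionary standing in for SSD reads are hypothetical, not OrchANN's implementation.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Vector = Sequence[float]


def prune_then_read(query: Vector, centroid: Vector,
                    dist_to_centroid: Dict[str, float],
                    read_vector: Callable[[str], Vector],
                    threshold: float) -> Tuple[List[str], int]:
    """Return (ids within threshold, number of reads actually issued)."""
    d_qc = math.dist(query, centroid)
    hits: List[str] = []
    reads = 0
    for vec_id, d_xc in dist_to_centroid.items():
        # d(q, x) >= |d(q, c) - d(x, c)|, so a bound above the threshold rules x out.
        if abs(d_qc - d_xc) > threshold:
            continue
        reads += 1  # only now pay for the (simulated) SSD access
        if math.dist(query, read_vector(vec_id)) <= threshold:
            hits.append(vec_id)
    return hits, reads


disk = {"a": (1.0, 0.0), "b": (5.0, 0.0), "c": (0.0, 1.2)}  # stand-in for SSD pages
centroid = (0.0, 0.0)
dist_to_centroid = {k: math.dist(v, centroid) for k, v in disk.items()}
hits, reads = prune_then_read((1.0, 0.2), centroid, dist_to_centroid,
                              disk.__getitem__, threshold=0.5)
```

In this toy run vector `b` is skipped without a read, while `c` passes the bound but fails the exact check. The bound is safe but not tight, so it removes reads without ever discarding a true neighbor.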

📚 https://arxiv.org/abs/2512.22838

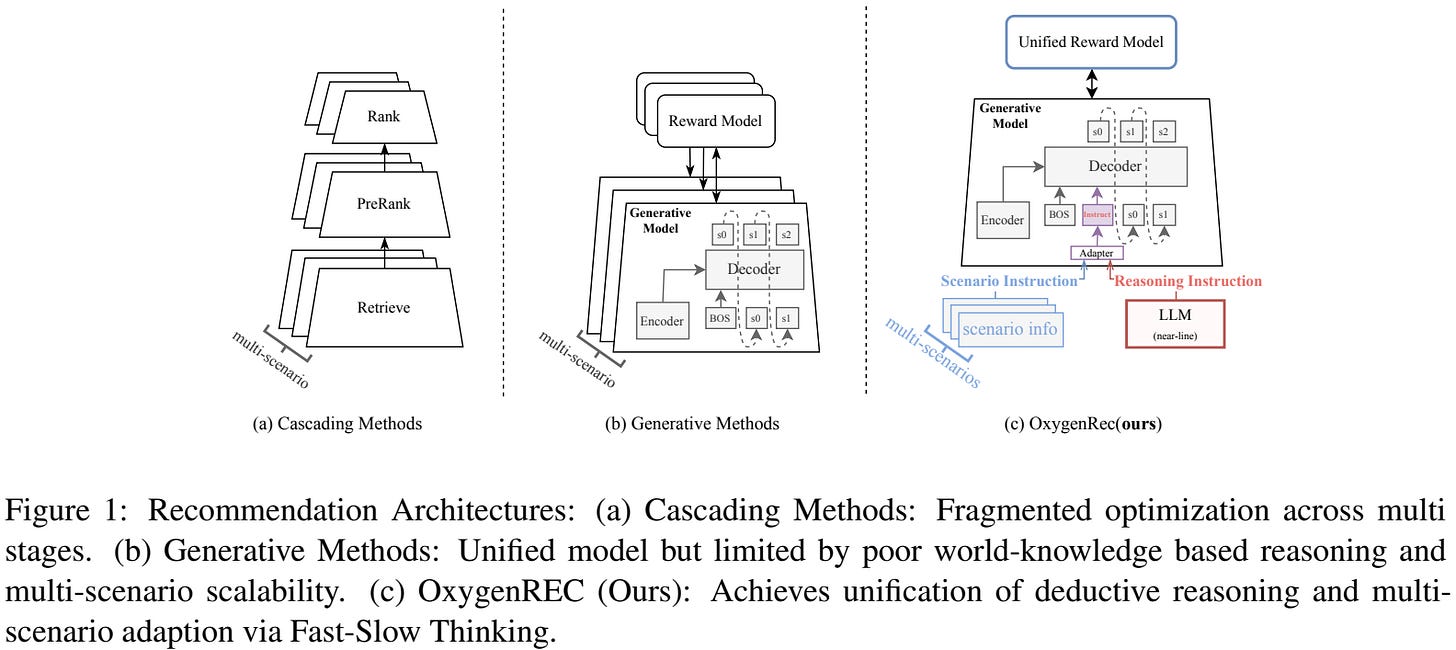

[9] OxygenREC: An Instruction-Following Generative Framework for E-commerce Recommendation

This paper from JD.com presents OxygenREC, an industrial-scale e-commerce recommendation system that addresses two challenges in generative recommendation: limited deductive reasoning capabilities and multi-scenario scalability. The system employs a Fast-Slow Thinking architecture where a near-line LLM pipeline generates Contextual Reasoning Instructions (slow thinking) that inject world knowledge and deductive reasoning, while a high-efficiency encoder-decoder backbone performs real-time item generation (fast thinking). To ensure effective instruction control, the framework introduces Instruction-Guided Retrieval (IGR) to filter relevant historical behaviors and Query-to-Item (Q2I) loss for semantic alignment between instructions and items. For multi-scenario adaptation, OxygenREC transforms scenario information into controllable instructions and uses unified reward mapping with Soft Adaptive Group Clip Policy Optimization (SA-GCPO) to align a single model across diverse business objectives, achieving a “train-once-deploy-everywhere” paradigm. The system represents items using multimodal Semantic IDs via residual quantization and leverages a unified PyTorch training framework achieving 40% Model FLOPs Utilization alongside the open-sourced xLLM inference engine for high-performance serving. Deployed across six core scenarios in JD.com covering the entire user lifecycle from homepage to checkout, OxygenREC demonstrated significant improvements, validating its effectiveness in bridging deep reasoning capabilities with strict industrial latency requirements while maintaining resource efficiency.

📚 https://arxiv.org/abs/2512.22386

[10] Retrieval Augmented Question Answering: When Should LLMs Admit Ignorance?

This paper from Google Research investigates the use of LLMs for retrieval-augmented question answering, specifically examining when LLMs should appropriately decline to answer due to insufficient information. The authors propose an adaptive prompting strategy that divides retrieved Wikipedia pages into smaller chunks and sequentially prompts the LLM with each chunk, allowing the model to either generate an answer if sufficient information is present or signal insufficiency and proceed to the next chunk. Experiments on three open-domain QA datasets (Natural Questions, TriviaQA, and HotpotQA) using Gemini 1.5 Pro demonstrate that this approach matches or outperforms standard prompting that uses the full context while reducing token usage by over 50%. The study reveals a critical limitation: when presented with insufficient information in “negative windows,” LLMs generate incorrect answers 54.3% of the time rather than declining to respond, representing a major source of error. Ablation studies show that sliding window order matters significantly; processing high-probability context first is essential since the model struggles to properly reject irrelevant passages. Notably, few-shot in-context learning fails to improve the model’s ability to abstain from answering when facing inadequate information. This suggests that the “bias to answer” is deeply ingrained in instruction-tuned models and cannot be easily addressed through prompt engineering alone, indicating the need for training objectives that explicitly reward correct abstention.
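The adaptive prompting loop described above can be sketched as follows: split the page into chunks, prompt with one chunk at a time, and stop at the first chunk for which the model produces an answer instead of signaling insufficiency. This is a minimal sketch under assumptions. The `INSUFFICIENT` sentinel, the word-based chunking, and the `fake_llm` stand-in are hypothetical, and a real setup would call an actual model.

```python
from typing import Callable, List, Optional, Tuple

INSUFFICIENT = "INSUFFICIENT"  # hypothetical sentinel the prompt asks the model to emit


def chunk_words(text: str, size: int) -> List[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def answer_adaptively(question: str, page: str, ask: Callable[[str, str], str],
                      chunk_size: int = 8) -> Tuple[Optional[str], int]:
    """Return (answer, chunks_used); answer is None if every chunk was insufficient."""
    chunks = chunk_words(page, chunk_size)
    for used, chunk in enumerate(chunks, start=1):
        reply = ask(question, chunk)
        if reply != INSUFFICIENT:
            return reply, used  # stop early: later chunks are never sent
    return None, len(chunks)


def fake_llm(question: str, context: str) -> str:
    """Stand-in for a real LLM call; answers only if the context mentions 1889."""
    return "1889" if "1889" in context else INSUFFICIENT


page = ("The Eiffel Tower is a wrought-iron lattice tower in Paris. "
        "It was completed in 1889 for the World's Fair. "
        "It is one of the most visited monuments in the world.")
answer, used = answer_adaptively("When was the Eiffel Tower completed?", page, fake_llm)
```

The early exit is where the token savings come from, since chunks after the answering one are never sent. The loop also makes the paper's failure mode visible: a model that answers from an insufficient chunk instead of returning the sentinel ends the loop early with a wrong answer.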

📚 https://arxiv.org/abs/2512.23836

I hope this weekly roundup of top papers has provided you with valuable insights and a glimpse into the exciting advancements taking place in the field. Remember to look deeper into the papers that pique your interest.

I also blog about Machine Learning, Deep Learning, MLOps, and Software Engineering domains. I explore diverse topics, such as Natural Language Processing, Large Language Models, Recommendation Systems, etc., and conduct in-depth analyses, drawing insights from the latest research papers.