A Comprehensive Review of State-of-the-Art Universal Text Embeddings, Measuring Question Difficulty in Retrieval-based QA, and More!

Vol. 55 for Jun 03 - Jun 09, 2024

Stay Ahead of the Curve with the Latest Advancements and Discoveries in Information Retrieval.

This week’s newsletter highlights the following research:

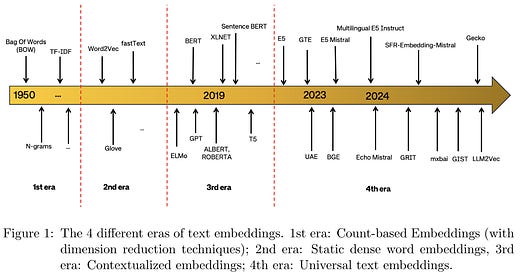

A Comprehensive Review of State-of-the-Art Universal Text Embeddings, from Cao et al.

An Approach to Structurally Aligning Visual and Textual Embeddings, from Alibaba

Leveraging Dynamic Granularity for Improved Retrieval-Augmented Generation, from Zhong et al.

A Framework for Consistent and Reliable LLM-based Passage Ranking, from OSU

Boosting Sample Efficiency in Recommender Systems through Large Language Model Integration, from Huawei

A Task-Specific Loss Function for Improved Multi-Task Learning Performance in Online Advertising, from Huawei

Robust Interaction-Based Semantic Relevance Calculation for Large-Scale E-commerce Search Engines, from Alibaba

Comparing Auto-Encoding and Auto-Regression Models in Sequential Recommendation, from Wang et al.

An Unsupervised Pipeline for Measuring Retrieval Complexity and Classifying Difficult Questions in QA, from Amazon Alexa AI
